A quick guide to enabling S3 native state locking in Terraform 1.11.0

In August 2024, AWS introduced Amazon S3 conditional writes, a feature that can prevent an existing object from being overwritten during an upload. This enhancement lets Terraform lock state files stored in S3 without relying on an external locking mechanism. The feature became generally available (GA) in Terraform v1.11.0 this week. This blog post provides a quick guide to enabling S3 native state locking in Terraform 1.11.0.

Terraform State Locking

Terraform supports various types of backends. If a backend supports state locking, Terraform will lock the state file for any operation that modifies it. Consider a scenario where one developer applies changes using Terraform, and the process takes a few minutes to create or modify resources. Meanwhile, another developer attempts to deploy new resources using the same state file. Without state locking, Terraform would allow both developers to write to the state file simultaneously, leading to potential corruption and inconsistencies. State locking prevents this by ensuring that only one operation can modify the state at a time, avoiding conflicts and maintaining data integrity.

Terraform and AWS S3 Backend

Terraform uses persisted state data to track the resources it manages. When using Terraform with AWS, the S3 backend is an option for storing state files. Previously, state locking for the AWS S3 backend relied on DynamoDB-based locking, where Terraform used DynamoDB to manage state status and control writes to the state file.

AWS has now introduced a new feature that enables Amazon S3 to support conditional writes. This allows S3 to natively manage how distributed applications with multiple clients update data concurrently across shared datasets. Each client can conditionally write objects, ensuring that one client does not overwrite data written by another. With this enhancement, Terraform no longer needs DynamoDB for state locking; it can rely on S3 itself.

Before Terraform v1.11.0

If you have used a version of Terraform earlier than v1.11.0, your backend block might look similar to the one below. It includes a parameter that names the DynamoDB table used to store LockIDs:

    terraform {
      backend "s3" {
        region         = "eu-north-1"
        bucket         = "cloudynotes-infra"
        key            = "terraform.tfstate"
        encrypt        = true
        dynamodb_table = "cloudynotes-infra-lock"
      }
    }

For more details about the Terraform S3 backend, check out the following resource: AWS S3 Backend

With Terraform v1.11.0

On February 27, 2025, Terraform v1.11.0 was released, making S3 native state locking generally available (GA).

To use this new feature, you must first upgrade Terraform to v1.11.0. If your Terraform configuration includes version constraints, keep in mind that the feature is generally available only from v1.11.0 onward. Before upgrading, review any potential breaking changes between your current version and v1.11.0. Once reviewed, update the version constraints in your terraform block accordingly.
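The version requirement can be expressed in the configuration itself. A minimal sketch, assuming you want to allow any release from v1.11.0 onward; tighten the constraint if your team pins exact versions:

```hcl
terraform {
  # Refuse to run on Terraform releases older than 1.11.0,
  # the first release where S3 native locking is GA.
  required_version = ">= 1.11.0"
}
```

With this in place, an older Terraform binary stops with a version error instead of failing later on an unknown backend argument.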

Then simply modify your backend block by removing the dynamodb_table parameter and replacing it with the new parameter called use_lockfile. The code block below provides an example:

    terraform {
      backend "s3" {
        region       = "eu-north-1"
        bucket       = "cloudynotes-infra"
        key          = "terraform.tfstate"
        encrypt      = true
        use_lockfile = true
        # dynamodb_table = "cloudynotes-infra-lock"
      }
    }
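If you prefer a more gradual switch, the S3 backend documentation notes that use_lockfile and dynamodb_table can be set together as a migration step, in which case Terraform acquires a lock from both. A sketch of that interim configuration, reusing the bucket and table names from this post:

```hcl
terraform {
  backend "s3" {
    region  = "eu-north-1"
    bucket  = "cloudynotes-infra"
    key     = "terraform.tfstate"
    encrypt = true

    # Interim step: lock in both places until DynamoDB is retired.
    use_lockfile   = true
    dynamodb_table = "cloudynotes-infra-lock"
  }
}
```

Once every run uses this configuration without problems, the dynamodb_table line can be dropped.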

When you run terraform init, Terraform detects the change in your backend configuration, causing the initialization to fail:

    > terraform init
    Initializing the backend...
    Initializing modules...
    ╷
    │ Error: Backend configuration changed
    │
    │ A change in the backend configuration has been detected, which may require migrating existing state.
    │
    │ If you wish to attempt automatic migration of the state, use "terraform init -migrate-state".
    │ If you wish to store the current configuration with no changes to the state, use "terraform init -reconfigure".

In this case, as indicated by the terraform init output, you should reinitialize Terraform using the -reconfigure option:

    > terraform init -reconfigure
    Initializing the backend...
    Backend configuration changed!

    Terraform has detected that the configuration specified for the backend
    has changed. Terraform will now check for existing state in the backends.

    Successfully configured the backend "s3"! Terraform will automatically
    use this backend unless the backend configuration changes.
    Initializing modules...
    Initializing provider plugins...
    - Reusing previous version of hashicorp/aws from the dependency lock file
    - Reusing previous version of hashicorp/time from the dependency lock file
    - Reusing previous version of hashicorp/local from the dependency lock file
    - Using previously-installed hashicorp/local v2.5.2
    - Using previously-installed hashicorp/aws v5.89.0
    - Using previously-installed hashicorp/time v0.12.1

    Terraform has been successfully initialized!

    You may now begin working with Terraform. Try running "terraform plan" to see
    any changes that are required for your infrastructure. All Terraform commands
    should now work.

    If you ever set or change modules or backend configuration for Terraform,
    rerun this command to reinitialize your working directory. If you forget, other
    commands will detect it and remind you to do so if necessary.

That’s it! You’ve successfully updated your backend to support AWS S3 native state locking, eliminating the need for DynamoDB to store LockIDs. You can verify your configuration by running terraform plan, which should confirm that no changes are detected.

    > terraform plan
    No changes. Your infrastructure matches the configuration.

    Terraform has compared your real infrastructure against your configuration and found no differences, so no changes are needed.

Verification

To test state locking with the new configuration, I added a time_sleep resource that waits 60 seconds. While Terraform was applying the change and sleeping, I ran terraform apply in a second session against the same state. As expected, the second session could not acquire the lock, so it could not modify the state file while the first session was still using it.

    resource "null_resource" "previous" {
      depends_on = [time_sleep.wait_60_seconds]
    }

    resource "time_sleep" "wait_60_seconds" {
      create_duration = "60s"
    }
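The null_resource above needs the hashicorp/null provider in addition to hashicorp/time. As a variant, and assuming nothing else in your configuration depends on the null provider, the built-in terraform_data resource (available since Terraform 1.4) can play the same role without an extra provider:

```hcl
# Holds the state lock for about a minute while it is being created.
resource "time_sleep" "wait_60_seconds" {
  create_duration = "60s"
}

# Built-in placeholder resource; it is created only after the sleep finishes.
resource "terraform_data" "previous" {
  depends_on = [time_sleep.wait_60_seconds]
}
```

Either form keeps terraform apply running long enough to start a second session and observe the lock.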

Session 1:

    > terraform apply
    time_sleep.wait_60_seconds: Still creating... [10s elapsed]
    time_sleep.wait_60_seconds: Still creating... [20s elapsed]
    time_sleep.wait_60_seconds: Still creating... [30s elapsed]
    time_sleep.wait_60_seconds: Still creating... [40s elapsed]
    time_sleep.wait_60_seconds: Still creating... [50s elapsed]

Session 2:

    > terraform apply
    ╷
    │ Error: Error acquiring the state lock
    │
    │ Error message: operation error S3: PutObject, https response error StatusCode: 412, RequestID: RFGCMN948YRCHJG9, HostID:
    │ xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx, api error PreconditionFailed: At least one of the
    │ pre-conditions you specified did not hold
    │ Lock Info:
    │ ID: 66f0aed7-a8cf-8ea2-4bea-a8f4c52b324d
    │ Path: cloudynotes-infra/terraform.tfstate
    │ Operation: OperationTypeApply
    │ Who: cloudynotes
    │ Version: 1.11.0
    │ Created: 2025-03-02 12:40:12.241858 +0000 UTC
    │ Info:
    │
    │
    │ Terraform acquires a state lock to protect the state from being written
    │ by multiple users at the same time. Please resolve the issue above and try
    │ again. For most commands, you can disable locking with the "-lock=false"
    │ flag, but this is not recommended.

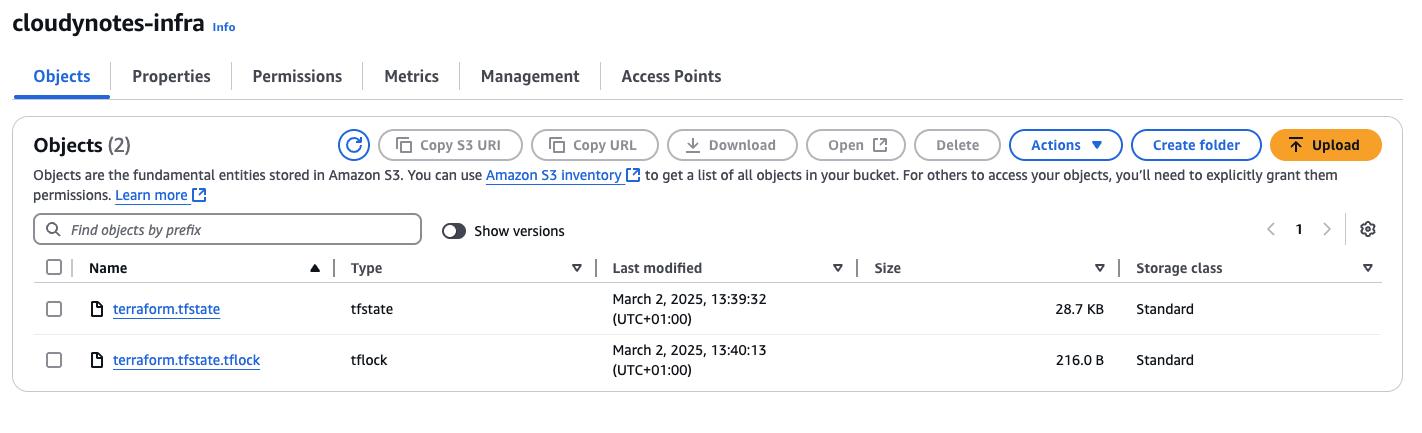

.tflock file appears in the bucket:
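Because the lock is an ordinary S3 object, the identity that runs Terraform needs permissions on it as well as on the state file. The sketch below follows the permissions listed in the S3 backend documentation and reuses the bucket and key from this post. The data source name state_lock and the statement IDs are illustrative, and the lock object key is assumed to be the state key with a .tflock suffix:

```hcl
data "aws_iam_policy_document" "state_lock" {
  # Terraform lists the bucket to find the state object.
  statement {
    sid       = "ListStateBucket"
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::cloudynotes-infra"]
  }

  # Read and write the state file itself.
  statement {
    sid       = "ReadWriteState"
    actions   = ["s3:GetObject", "s3:PutObject"]
    resources = ["arn:aws:s3:::cloudynotes-infra/terraform.tfstate"]
  }

  # Create, read, and release the lock file (assumed name: <key>.tflock).
  statement {
    sid       = "ManageLockFile"
    actions   = ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"]
    resources = ["arn:aws:s3:::cloudynotes-infra/terraform.tfstate.tflock"]
  }
}
```

If s3:DeleteObject is missing on the lock file, Terraform would likely be unable to release the lock after a run, so it is worth checking existing policies before switching.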

Clean up

Since we no longer need DynamoDB in our Terraform backend, we can remove it. The cleanup process for DynamoDB depends on your initial infrastructure setup. If you used the Cloud Posse tfstate-backend module to implement your AWS Terraform state backend, set dynamodb_enabled to false.

    module "remote_state" {
      source  = "cloudposse/tfstate-backend/aws"
      version = "1.5.0"

      namespace = "cloudynotes"
      name      = "infra"

      terraform_backend_config_file_name = "backend.tf"
      terraform_state_file               = "terraform.tfstate"
      force_destroy                      = false

      # Disable DynamoDB delete protection
      deletion_protection_enabled = false

      # Remove the DynamoDB lock table
      dynamodb_enabled = false
    }

If your DynamoDB table has delete protection enabled, you must first disable it before attempting to delete the table.
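If the lock table is managed directly rather than through a module, the same two-step cleanup might look like the sketch below. The resource name terraform_lock and the billing mode are assumptions for illustration; the table name matches the one used earlier in this post:

```hcl
resource "aws_dynamodb_table" "terraform_lock" {
  name         = "cloudynotes-infra-lock"
  billing_mode = "PAY_PER_REQUEST" # assumed; keep whatever your table uses
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }

  # Step 1: turn off deletion protection and run terraform apply.
  deletion_protection_enabled = false
}

# Step 2: delete the resource block above and apply again to remove the table.
```

Splitting the change into two applies avoids a failed delete while protection is still enabled on the table.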